Something has quietly shifted in how QA teams work.

Most of the testing now happens before we even log in. The suites run overnight, AI flags the failures, and the dashboards already look spotless.

It’s efficient, almost unsettling. The bugs that once tested our patience now surface in seconds, perfectly sorted by a system that never sleeps.

Speed isn’t what slows us down anymore. The real challenge begins once everything looks perfect.

Machines Move Fast. QAs Make Sense.

AI handles the mechanical side of testing well: regression runs, pattern recognition, accessibility scans. It does what it’s told, and it does it fast.

But quality still depends on judgment. Someone has to interpret what those results mean, see when a fix introduces new risk, or notice that a design decision makes a feature harder to use even if every test passes.

That layer of understanding, the bridge between automation and the real product, is still human territory.

QA Has Moved Closer to the Product

Testing now happens inside the product conversation, not just after development ends. To verify anything properly, you need to understand why it exists, what problem it solves, and how it connects to the rest of the system.

AI can point to an error, but it can’t decide if that error matters to a user or to the business. That decision requires context.

Modern QA focuses less on the number of tests run and more on the relevance of what’s being tested. The closer you are to product intent, the better your testing decisions.

The Human in the Loop

Automation covers breadth. QA provides depth.

When an automated check fails, QA figures out whether it’s a real defect or a false alarm. When nothing fails but the experience feels inconsistent, QA is the one who notices.

Keeping a human in the loop isn’t nostalgia for manual testing; it’s how we make sure all this precision still reflects real human experience.

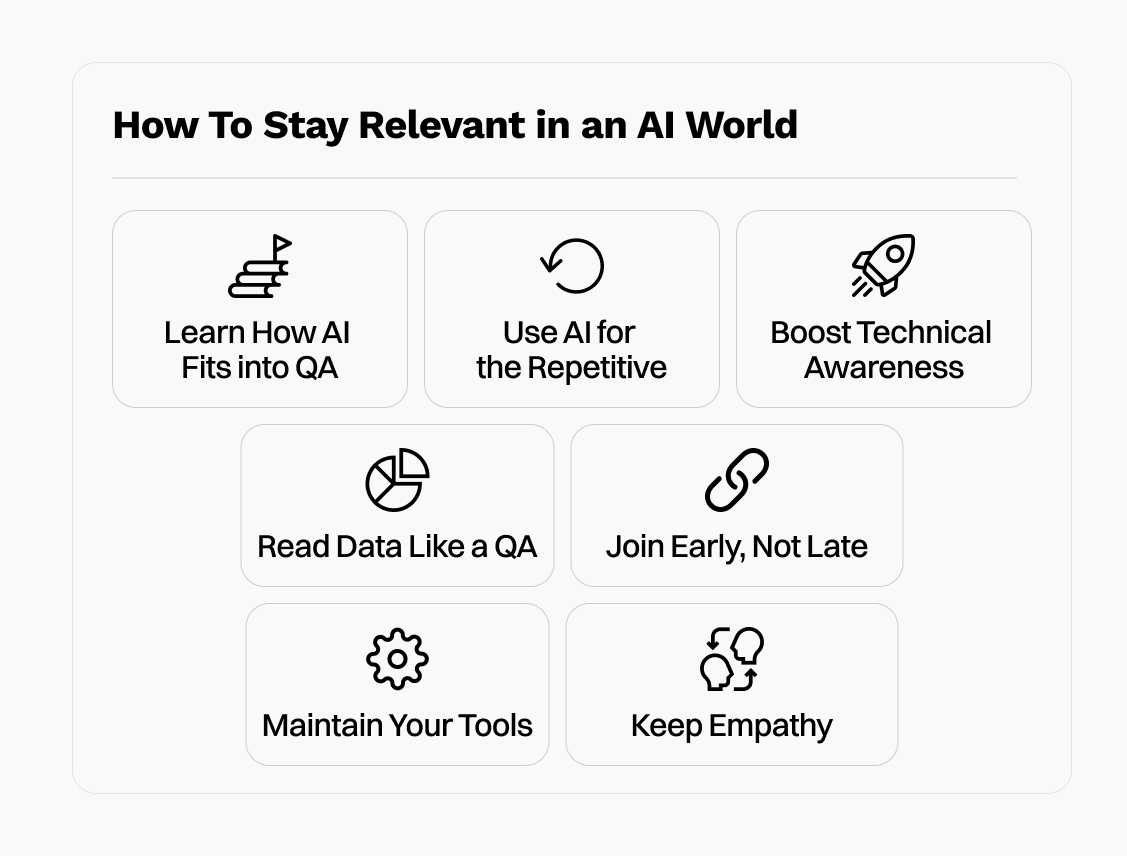

How to Stay Relevant in an AI World

So what does staying relevant actually look like? Here’s where to focus your time.

1. Learn how AI fits into QA

AI tools are becoming part of daily testing work: generating cases, analyzing logs, clustering failures. Learn what they do well and where they fall short. Use them for routine or repetitive tasks, but always review their output. Understanding the limitations of these tools makes you better at spotting real issues.
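One way to review a tool's failure clusters is to compare them against a simple baseline you fully understand. The sketch below is only an illustration, not how any particular AI tool works: it groups failures by their error message after masking volatile details such as numbers. The function names and the sample failures are hypothetical.

```python
import re
from collections import defaultdict


def normalize(message: str) -> str:
    """Mask volatile details so that similar failures share one key."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses, ids
    message = re.sub(r"\d+", "<n>", message)                # timeouts, status codes
    return message.strip().lower()


def cluster_failures(failures):
    """Group (test_name, message) pairs by their normalized message."""
    clusters = defaultdict(list)
    for test_name, message in failures:
        clusters[normalize(message)].append(test_name)
    return dict(clusters)


# Hypothetical failures from one overnight run.
failures = [
    ("test_checkout", "Timeout after 3000 ms waiting for #pay-button"),
    ("test_cart", "Timeout after 5000 ms waiting for #pay-button"),
    ("test_login", "AssertionError: expected 200, got 500"),
]

clusters = cluster_failures(failures)
for signature, tests in clusters.items():
    print(f"{len(tests)} failure(s): {signature} -> {tests}")
```

If the tool splits the two timeout failures into separate clusters, or merges them with the login failure, that is a cue to look closer before trusting its grouping.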

2. Use AI for the repetitive, keep people for the reasoning

Let AI handle setup work such as generating test outlines and organizing reports. The time you save should go into analysis, verification, and risk assessment. The goal is to use tools efficiently so that human time goes to work that needs interpretation.

3. Strengthen technical awareness

Know how your system is built. Understand the basics of APIs, databases, and deployment flows. You don’t need to write production code, but you should know how to trace a bug from the interface to the backend and explain what’s failing. The more you understand how things connect, the more valuable your feedback becomes.

4. Read data like a QA, not like a dashboard

Metrics are everywhere, but they don’t explain themselves. Learn to connect trends with actual behavior. When a crash rate increases, trace it back to a specific build or flow. When performance improves, confirm what changed. The ability to interpret data gives testing purpose beyond raw numbers.
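Tracing a crash-rate increase back to a build can start with very little code. The sketch below assumes you can export session records as (build, crashed) pairs; the record shape, the build numbers, and the 5% threshold are all made up for illustration.

```python
from collections import Counter


def crash_rate_by_build(sessions):
    """Return {build: crashed sessions / total sessions}."""
    totals, crashes = Counter(), Counter()
    for build, crashed in sessions:
        totals[build] += 1
        if crashed:
            crashes[build] += 1
    return {build: crashes[build] / totals[build] for build in totals}


def first_regressed_build(rates, build_order, threshold):
    """Return the earliest build whose crash rate exceeds the threshold, or None."""
    for build in build_order:
        if rates.get(build, 0.0) > threshold:
            return build
    return None


# Hypothetical export: 100 sessions per build.
sessions = (
    [("1.4.0", False)] * 98 + [("1.4.0", True)] * 2
    + [("1.4.1", False)] * 90 + [("1.4.1", True)] * 10
)

rates = crash_rate_by_build(sessions)
suspect = first_regressed_build(rates, ["1.4.0", "1.4.1"], threshold=0.05)
print(rates, suspect)
```

The number only points to a build. The QA work is finding out which change or user flow in that build explains it.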

5. Join early, not late

Quality decisions happen long before a build reaches QA. Sit in on planning sessions. Ask what success looks like for the feature. Call out grey areas before they turn into risk. It’s easier to prevent unclear behavior early than to report it later.

6. Maintain your tools as carefully as your tests

Automation and AI systems need maintenance like any other part of the product. Track flaky tests, false positives, and outdated scripts. When an AI classifier or model mislabels an issue, analyze why and correct the input. Controlled automation scales; neglected automation breaks quietly.
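Tracking flaky tests can begin with a simple rule: a test that both passed and failed on the same commit is a flakiness candidate, because the code did not change between runs. The sketch below assumes run history is available as (test_name, commit, passed) tuples; that format and the sample data are hypothetical.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return tests that both passed and failed on at least one commit."""
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    # Two distinct outcomes (True and False) on one commit means inconsistent results.
    flaky = {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical run history.
runs = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),   # same commit, different result
    ("test_search", "abc123", False),
    ("test_search", "abc123", False),  # fails consistently: likely a real defect
    ("test_cart", "abc123", True),
    ("test_cart", "def456", False),    # different commit: could be a regression
]

flaky_tests = find_flaky_tests(runs)
print(flaky_tests)
```

A consistent failure or a failure on a new commit is deliberately not flagged here, since those are more likely to be real defects or regressions than noise.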

7. Keep empathy grounded in evidence

User perspective is still what separates QA from scripts. When something behaves correctly but still feels off, back it up with data or reproduction context. Combine empathy with proof. That mix of human observation and factual reasoning is what keeps testing credible.

A Role That Keeps Evolving

Curiosity and precision are still the foundation of good testing. The difference is where that effort goes. Understanding how AI systems work, how they make predictions, and how to question them has become part of the craft.

QA is no longer a stage in delivery. It’s an ongoing function that connects engineering decisions to real-world impact.

Closing the Loop

AI will keep getting sharper: more precise, more exhaustive, more relentless. But it still can’t tell when something feels off. It doesn’t pause at the awkward silence between clicks or notice the flicker of confusion on a user’s face. That’s where we come in.

The human in the loop gives the results context. We decide what matters, which bugs truly impact people, and which friction becomes frustration.

Testing has never been just about passing or failing; it’s about sensing how a product feels in human hands.

The future of QA isn’t ruled by machines or guarded by humans. It’s built by both.